Modern Java in Action 3 - collection API

New API (in Java 8 and 9)

Collection classes got a few nice additions.

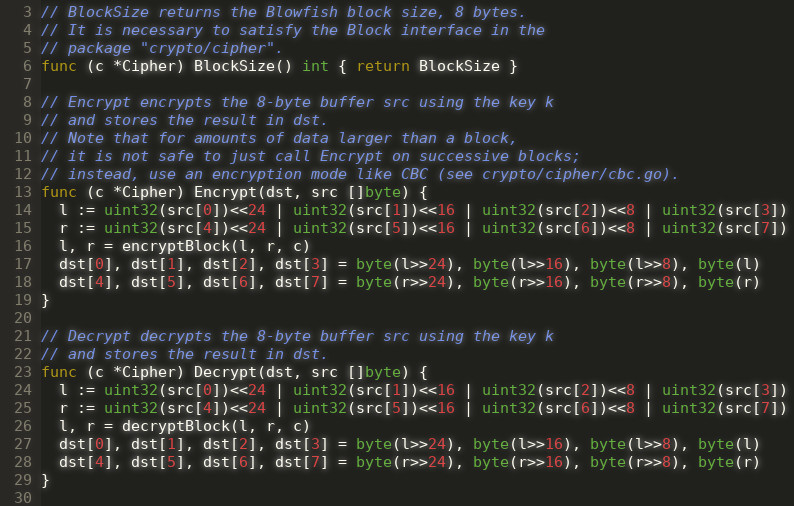

Factory methods

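They create immutable collections: if you try to add or remove elements, you get an UnsupportedOperationException.

List.of and Set.of have fixed-arity overloads for up to 10 elements, so the common small cases avoid the extra array allocation that a varargs call requires. For more than 10 elements a varargs overload is used. Map.of has no varargs variant: it accepts up to 10 key-value pairs, and beyond that you use Map.ofEntries.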
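A minimal sketch of the factory methods, assuming Java 9 or later. The class name `FactoryMethodsDemo`, the helper `rejectsAdd`, and the sample data are made up for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;

class FactoryMethodsDemo {

    // Hypothetical helper: returns true if the list refuses to accept a new element.
    static boolean rejectsAdd(List<String> list) {
        try {
            list.add("Chih-Chun");
            return false;
        } catch (UnsupportedOperationException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        // Fixed-arity overloads: no intermediate array is allocated for these calls.
        List<String> friends = List.of("Raphael", "Olivia", "Thibaut");
        Set<String> uniqueFriends = Set.of("Raphael", "Olivia", "Thibaut");
        Map<String, Integer> ages = Map.of("Raphael", 30, "Olivia", 25);

        // Map.ofEntries takes a varargs list of entries, for maps with more than 10 pairs.
        Map<String, Integer> agesFromEntries = Map.ofEntries(
                Map.entry("Raphael", 30),
                Map.entry("Olivia", 25));

        System.out.println(friends);             // [Raphael, Olivia, Thibaut]
        System.out.println(rejectsAdd(friends)); // true
        System.out.println(uniqueFriends.size() + " " + ages.equals(agesFromEntries));
    }
}
```

Sets and maps created this way have no guaranteed iteration order, so the sketch avoids printing them directly.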

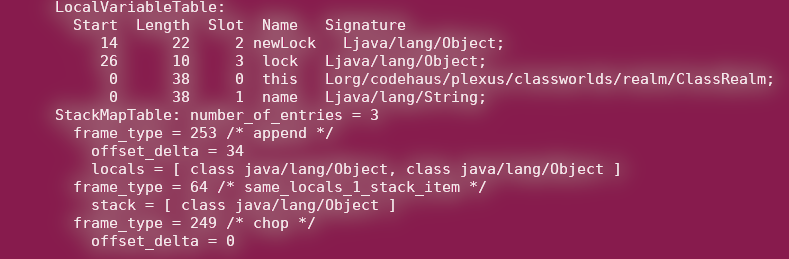

Lists: removeIf, replaceAll

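On a list you can use removeIf to remove every element that matches a predicate, and replaceAll to replace each element with the result of applying a function to it. Both modify the list in place, so they throw UnsupportedOperationException on an immutable list. removeIf is defined on Collection, so it is available on sets as well.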
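A short sketch of both methods on a mutable list. The class name `ListInPlaceDemo`, the helper `cleanUp`, and the sample codes are hypothetical, and the helper assumes every string is non-empty.

```java
import java.util.ArrayList;
import java.util.List;

class ListInPlaceDemo {

    // Hypothetical helper: drops codes that start with a digit,
    // then capitalizes the first character of the remaining ones.
    static List<String> cleanUp(List<String> codes) {
        // Copy into an ArrayList, because both methods mutate the list in place.
        List<String> result = new ArrayList<>(codes);
        result.removeIf(code -> Character.isDigit(code.charAt(0)));
        result.replaceAll(code -> Character.toUpperCase(code.charAt(0)) + code.substring(1));
        return result;
    }

    public static void main(String[] args) {
        System.out.println(cleanUp(List.of("a12", "1b3", "c14"))); // [A12, C14]
    }
}
```

Calling removeIf also avoids the ConcurrentModificationException that can occur when elements are removed from a list inside a for-each loop over that same list.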